Students:

- Jonah Eggenkemper

- Carlos Caro Hernández

- Mohamed Khedr

- Kjeld Vermeulen

(2022)

Introduction

Ter Laak Orchids is one of the leading orchid producers in Europe. The company grows, treats, and sells about 8 million orchids a year in its modern, industrialized greenhouses. Across its 17.5 ha of greenhouses, plant diseases pose a serious threat that must be monitored and controlled. Because of the large scale of production, about 5000 plants die every week from diseases and other causes.

Current situation

Ter Laak already knows the total plant loss during production, but it wants to know how many plants die from each specific disease or cause.

At the moment, Ter Laak Orchids immediately throws away the pots of all ill or dead plants to prevent contamination. As a result, the data on those plants is lost, and with it the opportunity to improve the product.

The problem

Ter Laak wants to know how many plants, and which ones, die from each specific disease or cause. The information about each plant is printed on its pot. In addition, a large share of the pots carry fluorescent data matrix codes that are linked to the printed information.

Ter Laak wants to combine the information on each pot with the disease or cause of death. Having a person read the pots would be slow: given the number of dead plants each week, it would take a full-time employee just to collect this information. Ter Laak therefore requires an automated solution with minimal human intervention that can scan 5000 pots in 8 hours. The pots are collected all around the factory and are sorted by disease or cause by the employee who recognizes the dead or ill plant.
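To put this requirement in perspective, the time available per pot follows directly from the target, assuming the machine runs for the full 8 hours without pauses (in practice, time spent loading and unloading trays would come out of this budget):

$$
t_{\text{pot}} = \frac{8\ \text{h} \times 3600\ \text{s/h}}{5000\ \text{pots}} = \frac{28\,800\ \text{s}}{5000\ \text{pots}} = 5.76\ \text{s/pot}
$$

In other words, one complete pick, scan and place cycle has to stay below roughly 6 seconds, which corresponds to about 625 pots per hour.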

Our solution

Our solution consists of a robot with a custom-designed end-of-arm tool, a lightbox containing the camera, one input tray and one output tray. The trays have multiple holes for plant pots with diameters of 12 and 9 cm. The employee who operates the scanning machine collects the stacks of pots, sorted by disease, from all over the factory and inserts all pots of one disease type into the holes of the input tray. They then select the disease type on a touchscreen and start the automated scanning process.

The robot picks the pots one after another from the stacks on the input tray and presents the data matrix on each pot to a camera, which decodes the information and saves it to a file. To avoid poor lighting conditions, the pot is placed inside a lightbox that houses the camera, where the fluorescent data matrix lights up under UV light.
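The last step, saving the decoded data to a file, can be sketched as a small CSV logger that attaches the cause selected on the touchscreen to every decoded code. This is a minimal sketch under assumptions: the column layout, the `ScanLog` class and the `UNREADABLE` placeholder are hypothetical, and decoding the image itself (done by the camera software) is not shown.

```python
import csv
from datetime import datetime, timezone
from typing import Dict, Optional, TextIO

# Hypothetical column layout; the project's actual file format is not documented.
FIELDS = ["timestamp", "cause", "payload"]


class ScanLog:
    """Writes one CSV row per scanned pot.

    All pots loaded in one batch share the disease/cause the operator
    selected on the touchscreen, so the cause is fixed per log.
    """

    def __init__(self, stream: TextIO, cause: str, write_header: bool = True) -> None:
        self.cause = cause
        self.writer = csv.DictWriter(stream, fieldnames=FIELDS)
        if write_header:
            self.writer.writeheader()

    def record(self, payload: Optional[str]) -> Dict[str, str]:
        """Log one pot; payload is the decoded data matrix text, or None."""
        row = {
            "timestamp": datetime.now(timezone.utc).isoformat(timespec="seconds"),
            "cause": self.cause,
            # Assumption: unreadable codes are still logged so that the pot
            # still counts toward the total for this cause.
            "payload": payload if payload is not None else "UNREADABLE",
        }
        self.writer.writerow(row)
        return row


if __name__ == "__main__":
    import io

    buf = io.StringIO()
    log = ScanLog(buf, cause="disease A")  # hypothetical cause label
    log.record("0001234567")               # hypothetical decoded payload
    log.record(None)                       # code could not be read
    print(buf.getvalue())
```

With a real file, the stream would be opened with `open(path, "a", newline="")`, as the `csv` module documentation recommends, passing `write_header=False` when appending to an existing log.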

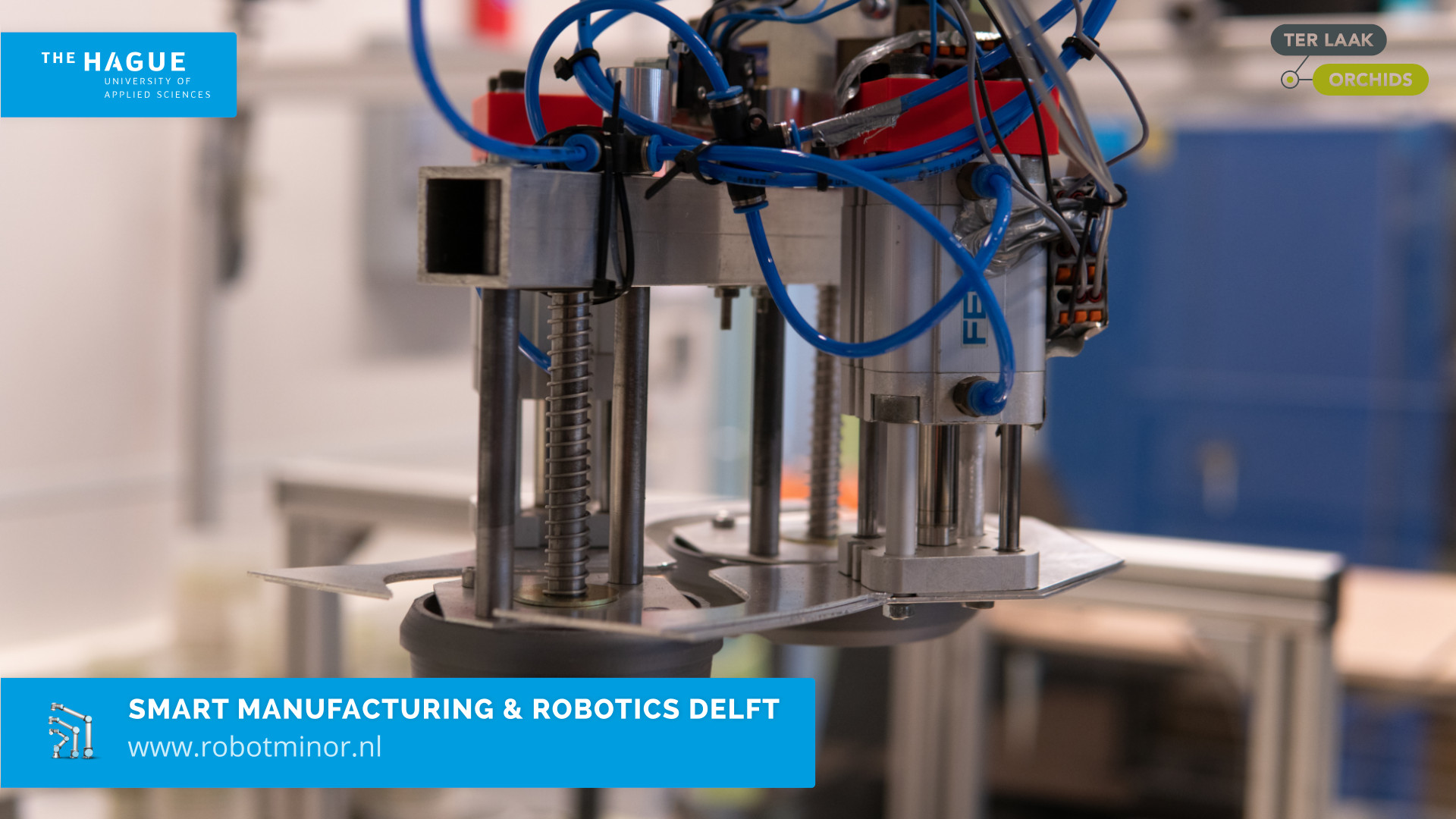

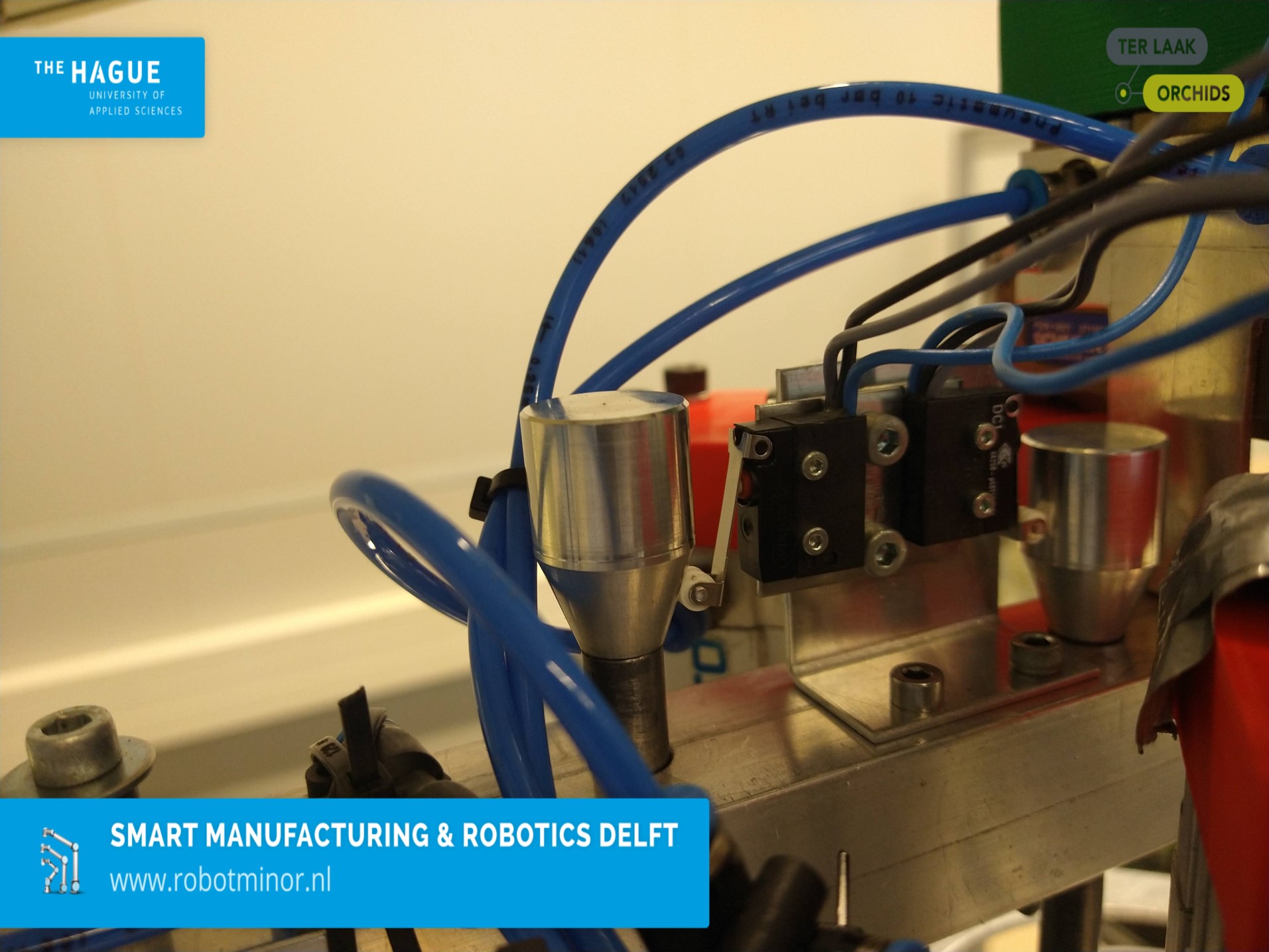

The end-of-arm tool is a pneumatic gripper: two compressed-air pistons spread two metal sheets apart so that a single pot fits between them and can be separated from the stack. 3D-printed parts hold the different components of the gripper together.

Finally, the robot places the pot on the output tray, where the scanned pots form new stacks.

Major decisions

A major decision in this project concerned how the information is encoded on the pots. Some text is printed on them in ink, but optical character recognition (OCR) software could not read it quickly and accurately enough, so we switched to data matrices. Pots that already carried these codes could be scanned directly; for the others, we stuck paper labels with data matrices onto them.

Another important decision concerned the gripper design. We tried many ideas before finding one that could pick the pots. Because some of the pots may be broken, not every way of grabbing them is feasible. Unstacking the pots was also a challenge; at first, unstacking and grabbing were going to be handled by separate mechanisms. In the final design, two metal sheets surround the pots at their edges, and two rounded endings (one for the large and one for the small pots) fit within the pot diameter. Two pistons then push a pot upwards by its edge. This movement compresses a spring, which closes a limit switch.

We also had to detect a pot in a stack so that the robot could go down and pick it up. With the assembly described above, a pressed limit switch tells the robot that it has detected the bottom of a pot. But how does it know that it has not reached the end of the tray? We solved this by setting the height of the tray in the code as the maximum distance the robot may descend.
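The stop logic can be sketched as a guarded descent: the tool goes down in small steps and stops either when the limit switch closes (a pot was found) or when it reaches the tray height (that position is empty). This is a minimal sketch, not the project's controller code: the robot and switch are replaced by a simulated `switch_closed` function, and `descend_to_pot`, the step size and all heights are hypothetical.

```python
from typing import Callable, Optional


def descend_to_pot(
    switch_closed: Callable[[float], bool],
    start_z_mm: float,
    tray_floor_z_mm: float,
    step_mm: float = 1.0,
) -> Optional[float]:
    """Lower the gripper from start_z_mm in steps of step_mm.

    Returns the height at which the limit switch closed (a pot was found),
    or None if the tool reached the tray floor without the switch closing,
    meaning this position of the tray is empty.
    """
    z = start_z_mm
    while z > tray_floor_z_mm:
        # Clamp so the tool is never commanded below the tray floor.
        z = max(z - step_mm, tray_floor_z_mm)
        if switch_closed(z):
            return z
    return None


def simulated_stack(z: float) -> bool:
    # Hypothetical stack: the switch closes once the tool is at or below 120 mm.
    return z <= 120.0


def simulated_empty(z: float) -> bool:
    # Hypothetical empty position: the switch never closes.
    return False


if __name__ == "__main__":
    print(descend_to_pot(simulated_stack, start_z_mm=300.0, tray_floor_z_mm=50.0))  # 120.0
    print(descend_to_pot(simulated_empty, start_z_mm=300.0, tray_floor_z_mm=50.0))  # None
```

On a real robot, this would more likely be a single guarded move that the controller stops when the switch input changes; the stepwise loop only makes the two stop conditions explicit.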

One highlight of this project was manufacturing our own trays: the holes in the trays we were given were so close together that the robot could not reach around one stack without being obstructed by an adjacent one.
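The spacing problem can be checked geometrically before a tray is built. A minimal sketch, assuming both the gripper footprint and the pot rim can be treated as circles: a gripper centred on one hole stays clear of the neighbouring stack when the centre-to-centre distance is at least the gripper's outer radius plus the neighbouring pot's radius plus a safety margin. The gripper diameter, margin and function names below are hypothetical, not the dimensions of the project's trays.

```python
import itertools
import math
from typing import List, Tuple

Point = Tuple[float, float]


def min_pitch_mm(pot_diameter_mm: float, gripper_outer_diameter_mm: float,
                 margin_mm: float = 5.0) -> float:
    """Smallest centre-to-centre hole distance at which a gripper around one
    stack stays clear of the neighbouring pot (both modelled as circles)."""
    return gripper_outer_diameter_mm / 2 + pot_diameter_mm / 2 + margin_mm


def grid_centres(rows: int, cols: int, pitch_mm: float) -> List[Point]:
    """Hole centres of a rectangular tray layout with equal pitch."""
    return [(c * pitch_mm, r * pitch_mm) for r in range(rows) for c in range(cols)]


def layout_is_clear(centres: List[Point], pot_diameter_mm: float,
                    gripper_outer_diameter_mm: float, margin_mm: float = 5.0) -> bool:
    """True if every pair of holes is far enough apart for the gripper."""
    need = min_pitch_mm(pot_diameter_mm, gripper_outer_diameter_mm, margin_mm)
    return all(math.dist(a, b) >= need
               for a, b in itertools.combinations(centres, 2))


if __name__ == "__main__":
    # Hypothetical 160 mm gripper footprint around 12 cm pots.
    print(min_pitch_mm(120.0, 160.0))                                  # 145.0
    print(layout_is_clear(grid_centres(2, 3, 150.0), 120.0, 160.0))    # True
    print(layout_is_clear(grid_centres(2, 3, 130.0), 120.0, 160.0))    # False
```

For a tray that mixes 12 and 9 cm pots, using the larger diameter gives a conservative pitch.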

Conclusion

We achieved our goal of building a robot that can scan, pick and place plant pots. After trying OCR, we switched to data matrices for better speed and accuracy.

The overall speed of the robot can still be improved.

Acknowledgements

We would like to thank Ewald de Koning and Roy van Rosmalen from Ter Laak Orchids for their help.

We are also grateful to Thijs Brilleman and Mathijs van der Vegt for their expertise and support during the project.