The company Colruyt deals with over 350 different types of crates which must be unloaded from a carrier on a conveyor belt. Each carrier can contain 40-50 crates. Workers must unload these carriers which is a very unergonomic method. Colruyt would like to have this (partially) automated.

Workers must lift heavy crates in high or low locations making it a physically demanding and dangerous job. Our goal is to make this job safe for the workers and to take the heavy load of their shoulders. With a co-working space, the robot can do all the physically demanding work.

The project we created is a robot arm that is able to detect crates and place them onto the conveyor. Since the crates are stacked onto a carrier by customers, their position can be very random. For this reason our solution will not be able to pickup all the crates and it is meant to be used as a very strong co-worker that never gets tired.

We developed the project at the Hague university, Delft campus. The main parts of the project are the end of arm tool, computer vision, HMI and machine learning discussed in detail below.

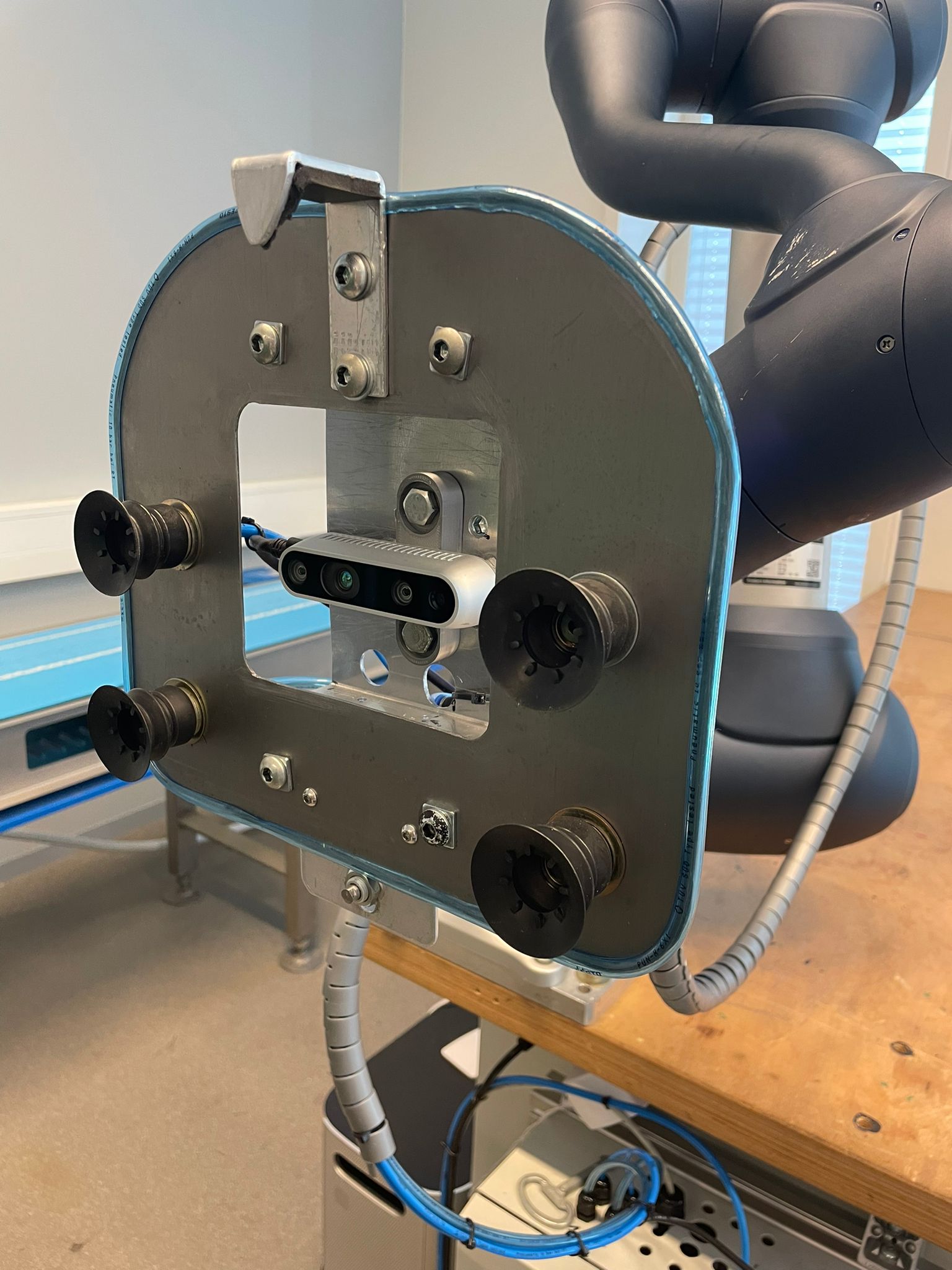

Our solution picks up and places crates by: Scanning in front of the carrier with the depth camera that is mounted to the end of the arm, taking a picture, the AI model detects the crates and our program decides which crate it will pick-up first. The robot moves to the selected crate and takes a second picture to extract location coordinates and rotation of the crate. The robot arm starts the pick-up sequence and places it on the conveyor belt. The End-of-arm-tool (EOAT) uses suction to pick and place.

One of the major decisions regarding our machine learning was to train the model for the crate detection with mix of real and digitally created and rendered images. We used these rendered images to train the model since we were not able to get a large enough dataset using exclusively real images. The digitally created images also give us more flexibility with the lighting and colors we use so that the AI model is not overfit to the lighting we had.

The objective of computer vision is to know what the computer is ‘seeing’. Using OpenCV libraries and Intel Realsense D435 depth camera, our system outputs a binary image of a crate with pixel coordinates that are translated to real world coordinates, in which the robot movement may interact with. Using the Realsense viewers built in calibration feature which optimises both the depth value and pixel coordinates was essential to the accuracy of pick up. The ‘point of pick up’ was found by finding the top edge of crate contours and from this aligning the centre of crates to Tool Centre Point (TCP) location. The binary image is created using image manipulation functions such as thresholding, binarizing and masks. The computer vision is linked to the machine learning algorithm by using the detected crates bounding boxes to isolate the image manipulation to within this frame.

Regarding the EOAT we had promising results with our first prototype with suction knobs. During the whole project we redefined our scope for the project because of the robot arm we used in the lab. The robot payload was 10 kg

and a full crate weight around 15 kg so for the proof of concept we could only use empty beer crates even though our EOAT was able to lift a whole beer crate filled with bottles. We are also using 2 venturi turbines so that we have separate airflow between the left and the right suction cups, this way if one side fails, the other side will still hold the crate in place.

and a full crate weight around 15 kg so for the proof of concept we could only use empty beer crates even though our EOAT was able to lift a whole beer crate filled with bottles. We are also using 2 venturi turbines so that we have separate airflow between the left and the right suction cups, this way if one side fails, the other side will still hold the crate in place.

The design choice for using a robot arm from Doosan Robotics was since it supports force control. With the RoboDK API we were not able to control the speed of the robot. This was only possible within the software but not through the API. The Homberger Hub which we used in our final setup had its own problems as well. There is not that much documentation on the internet for this device and robot arm. The setup of a simple HMI is good and fast but, in our case, we needed to communicate to an AI model and an external camera. It was very time consuming to make this work and costed us a lot of time. Although it was an acceptable solution considering our timeframe.

For the detection of the crates we use machine learning with a Yolov5 model. The model is trained on a mix of real images and rendered images so that we could get a large as possible dataset with different lighting so that it is as adaptable as possible and not overfit to the room we created the prototype.

Group Members: